Consistent Microformats

Many people have written code to solve this problem. I do it in my library, Parse This. Aaron Parecki does it in his XRay library. The Microsub specification defines a stricter jf2 output so that the client does not have to make all sorts of checks.

This is the point. It is easier to consume a clean and consistent parsed microformats structure. Some of this would probably be solved by some consensus on the matter.

So, what do Parse This and its ilk do? I lack a name for this sort of code.

- It has two options: feed or single return

- Feed tries to identify and standardize an h-feed. This means if there are multiple top-level h- items, it will try to convert them into an h-feed.

- Single will try to identify the top level h- item that matches the URL of the page.

- In both cases, it will run authorship discovery in order to find the representative author and add this as an author property to the h-feed, or single h-entry etc.

- It will try to run post type discovery.
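The feed and single options can be sketched roughly as follows. This is a minimal illustration in Python, not the code from Parse This or XRay; the function names `as_feed` and `as_single` are hypothetical, and the input is assumed to be the usual parsed microformats2 JSON with an `items` list. Authorship and post type discovery are left out.

```python
from typing import Any, Dict, List, Optional

Item = Dict[str, Any]


def top_level_items(parsed: Dict[str, Any]) -> List[Item]:
    """Return the top-level h-* items of a parsed microformats2 document."""
    return [
        item for item in parsed.get("items", [])
        if any(t.startswith("h-") for t in item.get("type", []))
    ]


def as_feed(parsed: Dict[str, Any]) -> Optional[Item]:
    """Feed option: reuse an existing h-feed, or wrap several items in one."""
    items = top_level_items(parsed)
    for item in items:
        if "h-feed" in item["type"]:
            return item
    if len(items) > 1:
        # Assumption: multiple top-level items are treated as an implied feed.
        return {"type": ["h-feed"], "properties": {}, "children": items}
    return None


def as_single(parsed: Dict[str, Any], url: str) -> Optional[Item]:
    """Single option: pick the top-level item whose url matches the page URL."""
    wanted = url.rstrip("/")
    for item in top_level_items(parsed):
        urls = item.get("properties", {}).get("url", [])
        if any(isinstance(u, str) and u.rstrip("/") == wanted for u in urls):
            return item
    return None
```

A consumer would call `as_feed(parsed)` for a listing page and `as_single(parsed, page_url)` for a permalink, and then only has to handle one predictable shape.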

Micropub 2.2.0 for WordPress Released

The IndieAuth plugin limits capabilities based on scope as of Version 3.5.0, so the capability checks will work perfectly.

On a practical note, this allows the code to be simpler on the Micropub side. The scope limiting code is unit tested now inside the IndieAuth plugin and will continue to be iterated on there.

This allows Micropub to focus on the publishing side of things. Also, there was a request to remove a dependency on IndieAuth.com as the default for the built-in code. The IndieAuth plugin has no external site dependencies by default.

IndieAuth 3.5.0 for WordPress Released

I also noted that I forgot to describe this clearly in the readme (if people read changelogs at all), and I will have to correct that as well. I wrote some of this code in January and it was merged, but I didn’t release it till July, so…

IndieAuth 3.5.0 implements scope support. Previously, scopes were handled by the Micropub plugin. It would check what scopes you had and implement accordingly. But WordPress does not have the concept of scopes. It has the concept of capabilities. And users have roles, which are collections of capabilities.

So IndieAuth 3.5.0 implements scopes also as collections of capabilities. If you are doing a capability check in WordPress, and the request was authenticated with an IndieAuth token specifically, it will filter your capabilities by the ones defined in the scopes the token has.
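The idea of a scope as a collection of capabilities can be illustrated with a small sketch. It is written in Python rather than the plugin's PHP, and the mapping in `SCOPE_CAPABILITIES` is a made-up example, not the plugin's actual table; only the principle (intersect what the user has with what the token's scopes allow) comes from the description above.

```python
from typing import Iterable, Set

# Hypothetical mapping from scopes to WordPress capabilities.
# The real plugin defines its own; these entries are for illustration only.
SCOPE_CAPABILITIES = {
    "create": {"edit_posts", "publish_posts"},
    "update": {"edit_posts", "edit_published_posts"},
    "delete": {"delete_posts"},
    "media": {"upload_files"},
}


def effective_capabilities(user_caps: Iterable[str],
                           token_scopes: Iterable[str]) -> Set[str]:
    """Return the capabilities a token-authenticated request may use.

    A capability survives only if the user already has it AND at least
    one scope on the token includes it.
    """
    allowed: Set[str] = set()
    for scope in token_scopes:
        allowed |= SCOPE_CAPABILITIES.get(scope, set())
    return set(user_caps) & allowed
```

For example, a user with `edit_posts` and `upload_files` presenting a token with only the `create` scope would end up with just `edit_posts`.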

That means if your user didn’t have the capability to begin with, you can’t use it. For a future version, I’ve considered having the authorization screen not even issue a token with that scope, but this is ultimately more secure than before.

It means that plugins don’t have to understand scope at all. They just have to enforce and support native WordPress capabilities, which they should anyway.

For now, the system only supports built-in capabilities, but there is nothing that says it cannot move to custom capabilities as needed, as everything is filterable and we accept pull requests.

The second big change, which I did mention in the changelog, brings support for using a remote IndieAuth endpoint back into this plugin. However, it is disabled by default. This is based on the code removed from Micropub, which had a parallel IndieAuth class that was only used when the IndieAuth plugin was not enabled. Having it here allows anyone who wants to use it to enable it, but simplifies the experience for the bulk of users. It also allows it to benefit from any of the scope or other enhancements put into the main plugin.

The plugin also simplifies the site checks to ensure that your site will work with the plugin, putting them into the Site Health checks where they logically belong. This includes an SSL check and an Authorization header check.

People Seem Confused about IndieAuth

The biggest confusion seems to be conflating IndieAuth and IndieAuth.com. IndieAuth.com is a reference implementation of the protocol built by Aaron Parecki, who edited the IndieAuth specification. Aaron works extensively with OAuth as part of his day job.

OAuth is that technology you see all around the web. It allows you to log into one site using the credentials of another. So, “Sign in with Google”, “Sign in with Facebook”, etc. The site you signed into uses one of these sites to verify your identity.

IndieAuth is a layer on top of that. It allows you to sign in with your website. So, to log in you provide the URL of that site, which represents your identity. The client goes and retrieves your site, looks for hidden links to your IndieAuth endpoints, and asks you to verify your identity to it. Then, once you have, it issues permission to the client to act as you, with whatever permissions you have approved.
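As a rough sketch of the "hidden links" step, a client might scan the page's `<link>` elements for the `authorization_endpoint` and `token_endpoint` rel values. The Python below is only an illustration: the helper name `discover_endpoints` is hypothetical, it works on an HTML string that has already been fetched, and it ignores endpoints advertised through HTTP `Link` headers.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

WANTED_RELS = ("authorization_endpoint", "token_endpoint")


class EndpointFinder(HTMLParser):
    """Collect the first href seen for each IndieAuth-related rel value."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.endpoints = {}

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if not href:
            return
        # rel may hold several space-separated values.
        for rel in (attributes.get("rel") or "").split():
            if rel in WANTED_RELS and rel not in self.endpoints:
                # Resolve relative hrefs against the page URL.
                self.endpoints[rel] = urljoin(self.base_url, href)


def discover_endpoints(html, base_url):
    """Return a dict mapping rel value to absolute endpoint URL."""
    finder = EndpointFinder(base_url)
    finder.feed(html)
    return finder.endpoints
```

The client would then send the user to the discovered authorization endpoint to approve the request.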

IndieAuth.com, being a reference implementation, wouldn’t know how to verify your identity. So, it uses a workaround called RelMeAuth. If you put a link on your website to your GitHub account, or other sites that support OAuth, marked up in HTML with rel="me", it would go to those services it supported and check, using the GitHub example, whether your GitHub profile had a link back to your website. This would prove your GitHub account and your website were owned by the same person. Then if you could successfully authenticate to GitHub, it would then issue the client the permissions it requested.
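The two-way link check at the heart of this can be sketched as below. This is an illustrative Python version, not IndieAuth.com's code: `mutually_linked` is a hypothetical helper, both pages are passed in as already-fetched HTML strings, and URL comparison is reduced to trimming a trailing slash. The OAuth login to the third-party service, which is the step that actually authenticates you, is not shown.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


def normalize(url):
    """Crude normalization for comparison: drop any trailing slash."""
    return url.rstrip("/")


class RelMeCollector(HTMLParser):
    """Collect absolute URLs of <a> and <link> elements carrying rel="me"."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = set()

    def handle_starttag(self, tag, attrs):
        if tag not in ("a", "link"):
            return
        attributes = dict(attrs)
        rels = (attributes.get("rel") or "").split()
        href = attributes.get("href")
        if "me" in rels and href:
            self.links.add(normalize(urljoin(self.base_url, href)))


def rel_me_links(html, base_url):
    collector = RelMeCollector(base_url)
    collector.feed(html)
    return collector.links


def mutually_linked(site_url, site_html, profile_url, profile_html):
    """True only if the site links to the profile AND the profile links back."""
    site_points_out = normalize(profile_url) in rel_me_links(site_html, site_url)
    profile_points_back = normalize(site_url) in rel_me_links(profile_html, profile_url)
    return site_points_out and profile_points_back
```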

For IndieAuth.com to work with your site, you had to link to it on your site in a certain way, designating it as the authentication endpoint for that URL. That meant that unless someone had the ability to edit your page (a bigger problem), they couldn’t use it to get into your site.

But IndieAuth.com isn’t meant to be a permanent service, and the fact that people think IndieAuth.com = IndieAuth the protocol is a problem. It is meant to be a bridge for people.

So, I came in, naively, when I started using IndieAuth.com and said…I want to do the same thing, but I don’t want to log in using GitHub…I want to log in using my WordPress credentials. So, in 2018, I learned enough to write an IndieAuth endpoint for WordPress. So, you can, instead of putting indieauth.com as your provider, install a WordPress plugin and your site will become a provider.

Try to log in with your URL and it will redirect you to log in with WordPress, then issue credentials to the client in the form of a token that can be revoked from the WordPress admin.

But people continue to see IndieAuth as logging into other websites via IndieAuth.com and therefore via GitHub…that can certainly be a service and a thing you can do. However, that’s not IndieAuth.

So, going forward, I’ve decided that I’ll be disabling the code from the IndieWeb WordPress plugin that allows you to use IndieAuth.com in favor of the built-in solution. Those who want to use an external service will still be able to do so, but this will be an ‘expert’ feature. Because enabling a plugin and it just working is what most people want.

And if it doesn’t work, please report the bug and we’ll fix it.

Simple Location 4.1.6/7 Released

For various reasons, I was reluctant to do that. But I came up with another solution. Adding a map provider that supports a custom hosted service. In this case, a fork of a Static Maps API written 6 years ago by someone I know. You can find my fork here.

By using a self-hosted but external static map API, I keep the option out of the plugin for now. It will also work with anything that implements the same API.

In the same update, I also fixed a few bugs, and added moonrise and moonset calculations, which display along with sunrise, sunset, and moon phase in the Last Seen widget for now.

Simple Location 4.1.5 Released

This evening I released Simple Location 4.1.5. This was prompted by the realization that Wikimedia Maps was no longer permitting third-party usage.

- I replaced it with Yandex Maps, which doesn’t require an API key either for non-commercial use. I may add additional Yandex services in future.

- I also added Geoapify as a map provider…I may also add in its geocoding service in future.

- Open Route Service, as it is an open initiative, is now supported as a geolocation/elevation provider. I like to support any open source options when I can.

- Preliminary Moon Phase calculation, though the formula is not up to my standards and needs to be replaced. I spent 20 minutes on it and I spent a week learning enough about how to adjust the sunrise and sunset based on location and elevation.
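For context on what a quick moon phase calculation can look like, here is one common rough approximation, sketched in Python. It is not the formula used in Simple Location: it simply counts days since a reference new moon (taken here as 2000-01-06 18:14 UTC) and wraps by the mean synodic month, ignoring the variations in the Moon's orbit, so it should be treated as approximate.

```python
from datetime import datetime, timezone

# Mean length of a lunar cycle in days.
SYNODIC_MONTH_DAYS = 29.530588853
# Assumed reference new moon used as the epoch for this approximation.
REFERENCE_NEW_MOON = datetime(2000, 1, 6, 18, 14, tzinfo=timezone.utc)

PHASE_NAMES = [
    "New Moon", "Waxing Crescent", "First Quarter", "Waxing Gibbous",
    "Full Moon", "Waning Gibbous", "Last Quarter", "Waning Crescent",
]


def moon_age_days(when: datetime) -> float:
    """Days elapsed since the most recent (mean) new moon.

    `when` must be timezone-aware.
    """
    elapsed = (when - REFERENCE_NEW_MOON).total_seconds() / 86400.0
    return elapsed % SYNODIC_MONTH_DAYS


def moon_phase_name(when: datetime) -> str:
    """Map the moon's age onto one of eight named phases."""
    fraction = moon_age_days(when) / SYNODIC_MONTH_DAYS
    # Round to the nearest eighth of the cycle, wrapping back to New Moon.
    index = int(fraction * 8 + 0.5) % 8
    return PHASE_NAMES[index]
```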

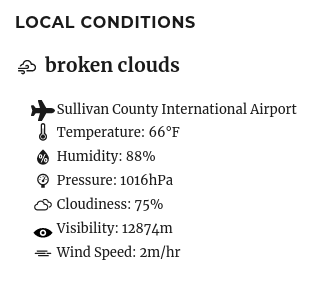

In the last major update to Simple Location, 4.1, I added in additional icon sets. In this version, I redid the presentation of all of the widgets to show additional properties and standardized all the widgets so they use the same display logic.

So what is next:

- In a previous version, I switched to storing everything in metric (meters, Celsius, etc.). I already convert back to imperial measurement for temperature if the configuration option is set. I still need to do so for other measurements…as I’m showing visibility and rain in metric still. I need to do the same on the backend. But as someone who uses Fahrenheit and feet…I would use this.

- I also continue to think of what other weather parameters I can offer and display. Heat index and dewpoint, which are derived from the humidity and temperature, would be relatively easy to add.

- Being able to pull in station data from my own weather station is still a goal. The issue with this is designing an implementation that is not limited to working only with my setup, and always pulls from my endpoint except if they are not available.

- I have the concept of zones for location, which are areas around a location. Anything inside of a zone will be hidden on a post, and the label replaced with the zone name.

- A long time ago, I declared I wanted to add venues for location, which would essentially be to allow for archive pages for locations.

- Now I’m looking at stations, which would be a fixed weather location.

The above three things are too similar for me to feel comfortable going any further till I think about this. Zones may need to stay separate, as they are a privacy matter. But stations and venues are both public items…but no one posts from a weather station…they might post from a venue…so maybe I should build stations regardless.

If I do build the station model, it would likely merge custom stations like mine with station IDs from the Met Office, National Weather Service, OpenWeatherMap and AerisWeather, as well as any future services that support weather stations, and allow those to be kept in a list. It would then have to determine if it was close to such a station…and use that data. Not quite sure how to do that simply.
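One simple way to approach the "is it close to a station" question is a great-circle distance check against the stored list. The Python sketch below is only an illustration of that idea, not a design for the plugin: the station dictionaries with `lat` and `lon` keys, the `nearest_station` helper, and the 10 km default cutoff are all assumptions.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points given in decimal degrees."""
    d_lat = radians(lat2 - lat1)
    d_lon = radians(lon2 - lon1)
    a = (sin(d_lat / 2) ** 2
         + cos(radians(lat1)) * cos(radians(lat2)) * sin(d_lon / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def nearest_station(lat, lon, stations, max_km=10.0):
    """Return the closest station within max_km, or None if none qualifies.

    Each station is assumed to be a dict with "lat" and "lon" keys.
    """
    best = None
    best_distance = max_km
    for station in stations:
        distance = haversine_km(lat, lon, station["lat"], station["lon"])
        if distance <= best_distance:
            best, best_distance = station, distance
    return best
```

A linear scan like this is fine for a short list of stations; if nothing falls within the cutoff, the caller would fall back to the regular weather provider.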

Thoughts on IndieWebCamp West

We’ve tried online IndieWebCamps before, but I felt we missed some opportunities in the past. So, we tried some new things. The whole event was conducted using Zoom.

- A Cook Your Own Dinner pre-party where we shared a meal together and socialized.

- A room serving as a ‘Hallway Track’ for off-topic chatting

- Two rooms with 4 sessions each.

The sessions were a good mix of conversation types. And even afterward, I found myself sitting up each night after the event just chatting with people. It was the first time I felt we captured more of the in-person IndieWeb feeling by adding in those social opportunities.

Hoping for an East Coast timezone event later this year, and some popup sessions in between, to keep things alive. If not for the current state of the world, we might have had one IndieWebCamp a month in 2020. We’d already had one in February, an online one, and one in March that was converted into a remote event at the last minute.